Applying competitive data to improve comfort

Project Summary:

During calibration of Navistar’s upcoming advanced driver assistance systems (ADAS) program, we received subjective feedback that our braking compared unfavorably with that of competitors. To address this, I planned and executed a benchmarking exercise comparing our current and upcoming products with vehicles from two of our largest competitors.

My role in the project:

As the ADAS Lead for this study, I developed the test plan, scheduled vehicle and track time, collected and analyzed the data, and presented key findings to stakeholders. I worked closely with the ADAS technical specialist, who guided the technical hypotheses and drove during challenging test maneuvers.

Quantifying performance began with a comprehensive plan

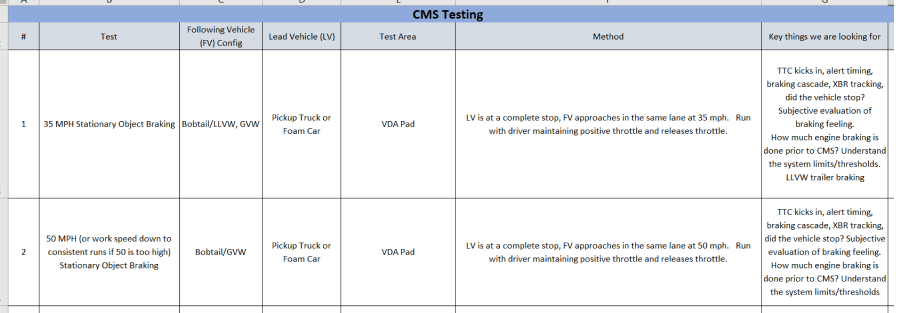

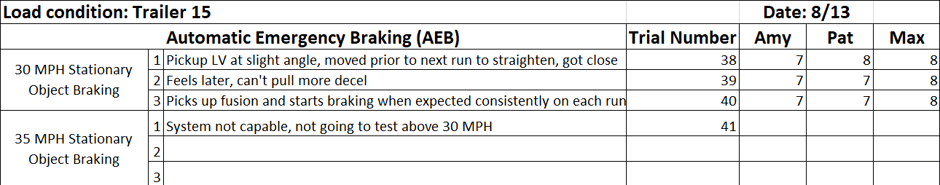

The plan included 27 test maneuvers to simulate realistic driving use cases and to test vehicle performance against Navistar’s vehicle-level ADAS requirements. Each maneuver had unique objectives in addition to a subjective evaluation score (aligned to the SAE J1441_201609 10-point scale).

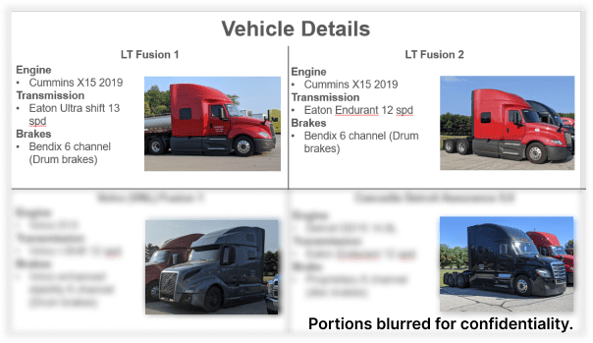

Four vehicles were included in this study: our current production truck, our development truck with our next-generation system, and two competitor trucks. Tractor and trailer configurations were identified for each test. ADAS performance varies significantly between a truck with a heavily loaded trailer (GVW), a lightly loaded trailer (LLVW), and no trailer (bobtail).

Data collection spanned 4 weeks at our proving grounds

Each of the four vehicles was scheduled for one week, and test cases were completed on the test track or test pad. Data was collected using CANalyzer, which provides data for the hundreds of signals on the CAN bus. Subjective scores and comments were recorded in an Excel table after each trial run.

Comparing stationary object braking between vehicles

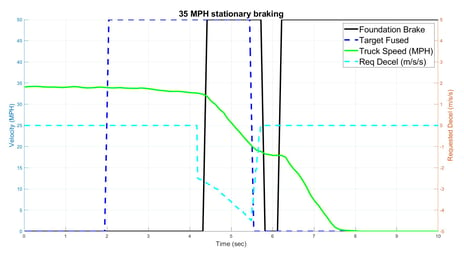

A variety of comparisons were made across ADAS features, but one that stood out was the braking cascade during automatic emergency braking (AEB) events. A brake cascade is the deceleration requested once the camera and/or radar detects a target and the system determines that intervention is necessary. For this test, the test vehicle approaches a stationary vehicle at 30 mph.
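To make the idea of a cascade concrete, the sketch below estimates stopping distance from 30 mph when deceleration is requested in stages. It is only an illustration: the function name `stopping_distance`, the stage format, and the deceleration values are assumptions chosen for the example, not values from this study, and it ignores brake system delay and pressure build-up.

```python
MPH_TO_MPS = 0.44704  # exact mph -> m/s conversion factor


def stopping_distance(speed_mph, stages):
    """Distance in meters to stop under a staged deceleration request.

    stages: list of (duration_s, decel_mps2) tuples. The final stage is
    held until the vehicle stops, so its duration is ignored.
    All values are hypothetical inputs, not calibrated cascade data.
    """
    if not stages:
        raise ValueError("stages must not be empty")
    v = speed_mph * MPH_TO_MPS
    distance = 0.0
    for i, (duration, decel) in enumerate(stages):
        is_last = i == len(stages) - 1
        time_to_stop = v / decel if decel > 0 else float("inf")
        if is_last or time_to_stop <= duration:
            if decel <= 0:
                raise ValueError("final stage needs a positive deceleration")
            # Vehicle stops within this stage: d = v^2 / (2a)
            return distance + v * v / (2 * decel)
        # Constant-deceleration kinematics over the full stage
        distance += v * duration - 0.5 * decel * duration ** 2
        v -= decel * duration
    raise ValueError("unreachable: the final stage always returns")


# Illustrative comparison: one hard stage vs. a gentle warning stage first
hard_stop = stopping_distance(30, [(0.0, 6.0)])
cascaded = stopping_distance(30, [(1.0, 2.0), (0.0, 6.0)])
print(f"single stage: {hard_stop:.1f} m, cascaded: {cascaded:.1f} m")
```

A gentler first stage uses more of the available distance before full braking begins, so the choice of cascade directly affects how close to the target the vehicle comes to rest.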

Determining brake cascades is a challenging balance between comfort and confidence. Tradeoffs have to be made between stopping as quickly as possible and using more of the available distance to stop closer to the lead vehicle. We also compared the time elapsed between three events: when a target was detected (camera and radar fused), when the brakes were requested, and when the brakes were applied.
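The timing comparison can be sketched as follows, assuming each run is available as time-aligned lists of samples. The signal names (`detected`, `requested_decel`, `measured_decel`) and the 0.5 m/s² threshold used to decide that the brakes are "applied" are hypothetical placeholders, not the actual CAN signals or criteria used in the study.

```python
def first_time(times, values, predicate):
    """Return the first timestamp whose value satisfies predicate, else None."""
    for t, v in zip(times, values):
        if predicate(v):
            return t
    return None


def event_latencies(times, detected, requested_decel, measured_decel,
                    applied_threshold=0.5):
    """Compute delays between detection, brake request, and brake application.

    applied_threshold (m/s^2) is an assumed cutoff for "brakes applied".
    Returns None if any of the three events never occurs in the run.
    """
    t_detect = first_time(times, detected, bool)
    t_request = first_time(times, requested_decel, lambda a: a > 0)
    t_applied = first_time(times, measured_decel,
                           lambda a: a >= applied_threshold)
    if t_detect is None or t_request is None or t_applied is None:
        return None
    return {
        "detect_to_request_s": t_request - t_detect,
        "request_to_applied_s": t_applied - t_request,
    }


# Made-up samples at 0.5 s spacing, for illustration only
times = [0.0, 0.5, 1.0, 1.5, 2.0]
detected = [False, True, True, True, True]
requested = [0.0, 0.0, 2.0, 4.0, 4.0]
measured = [0.0, 0.0, 0.1, 1.8, 3.9]
print(event_latencies(times, detected, requested, measured))
```

Computing the same two delays for every vehicle puts the comparison on a common footing, independent of how each system names or scales its signals.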

These brake cascade plots, combined with the subjective ratings, led to updates to the braking cascade for our development system. Due to confidentiality, these changes can't be disclosed.

Creating a process to automate the data processing

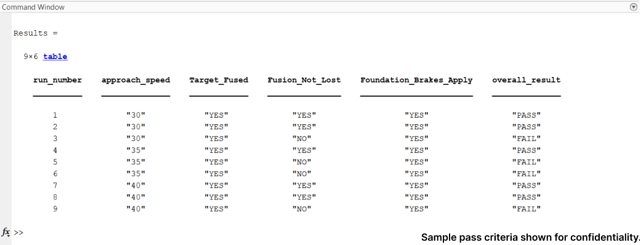

Because the data sets contain far more data points than are relevant to the event, parsing test data manually is time consuming. I created a MATLAB script that lets all data sets for stationary object braking events be uploaded and processed at once. The script outputs graphs of the relevant signals for each run, cropped to the critical part of the event, and generates a corresponding summary table showing which runs met our internal pass requirements.
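The general shape of such a batch process is sketched below in Python rather than MATLAB. It is not the script used in the project: the sample fields (`t`, `requested_decel`, `speed`, `range_m`), the one-second pre-event window, and the `min_gap_m` pass criterion are all hypothetical stand-ins, since the actual signals and pass requirements are internal.

```python
def crop_to_event(samples, pre_s=1.0):
    """Keep samples from pre_s before the first brake request until standstill.

    samples: list of dicts with keys t, requested_decel, speed, range_m
    (hypothetical field names). Returns [] if no brake request occurs.
    """
    start = next((s["t"] for s in samples if s["requested_decel"] > 0), None)
    if start is None:
        return []
    end = next((s["t"] for s in samples
                if s["t"] >= start and s["speed"] <= 0),
               samples[-1]["t"])
    return [s for s in samples if start - pre_s <= s["t"] <= end]


def summarize(runs, min_gap_m=1.0):
    """Build one summary row per run; min_gap_m is an assumed pass criterion."""
    rows = []
    for name, samples in sorted(runs.items()):
        event = crop_to_event(samples)
        if not event:
            rows.append({"run": name, "final_gap_m": None, "passed": False})
            continue
        final = event[-1]
        stopped = final["speed"] <= 0
        rows.append({
            "run": name,
            "final_gap_m": final["range_m"],
            "passed": stopped and final["range_m"] >= min_gap_m,
        })
    return rows


def sample(t, req, speed, rng):
    return {"t": t, "requested_decel": req, "speed": speed, "range_m": rng}


# Made-up runs for illustration only
runs = {
    "run_01": [sample(0, 0, 13, 40), sample(1, 0, 13, 27),
               sample(2, 4, 9, 16), sample(3, 6, 3, 8), sample(4, 6, 0, 5)],
    "run_02": [sample(0, 0, 13, 40), sample(1, 0, 13, 27),
               sample(2, 0, 13, 14)],
}
for row in summarize(runs):
    print(row)
```

Cropping before summarizing keeps plots and pass checks focused on the braking event itself rather than the approach and post-stop portions of each log.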

Project Takeaways

It's critical to spend time in competitive products and understand their solutions during the development process. Often, small changes guided by competitive solutions can be made to our products. This is especially valuable in trucking, where drivers often switch between vehicles and have pre-established mental models based on other vehicles.

Vehicle testing is complicated and there are many variables that can impact results, but strong planning and documentation allow for better assessment of results.

Data processing is complex and time consuming. Putting in the time to automate it not only saves time in the long run but can also increase the amount of data that gets reviewed.